Learning Network Games

Network games are a powerful tool for modelling strategic interactions between individuals or organisations played out on networks, where a player's payoff depends not only on their own actions but also on those of their neighbours. Such games have numerous applications in economics and social sciences, including studying the spread of influence in social networks, the dynamics of financial markets, and the formation of alliances in international relations. The study of network games typically assumes the underlying network structure to be known, which is often wishful thinking. Recently, machine learning approaches have been proposed to tackle this problem by leveraging the observed actions of players to learn the underlying network structure. In this blog post, we outline a novel approach that uses a transformer-like architecture to infer the network structure of a game without explicit knowledge of the utility function associated with the game.

This post is based on the paper "Learning to infer the structure of network games".

Network Games

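Game theory is a mathematical framework for modelling and analysing situations where multiple decision makers interact with each other, and where the outcome of each decision depends on the actions of all players involved. In network games, the players sit on the nodes of a graph, and each player's payoff depends on their own action and on the actions of their neighbours.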

Equilibrium actions refer to a set of strategies where no player has an incentive to change their strategy, given the strategies of the other players. In other words, at equilibrium, each player’s strategy is optimal, given the strategies of the other players. In network games, the equilibrium actions depend on the graph structure, along with other parameters dependent on the game.

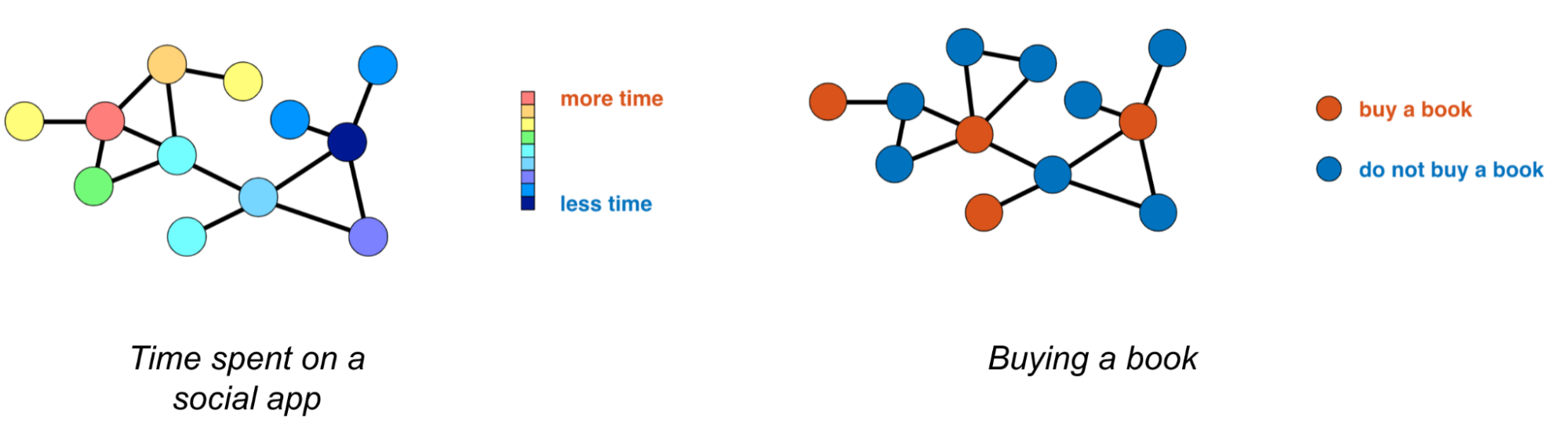

Consider, for example, a scenario where individuals on a social network can decide how much time to spend on the platform. In such a case, their behaviours may be influenced by their friends on the network, which creates a strategic interdependence between players. For instance, if Joe’s friends spend a lot of time on the platform, Joe might perceive a greater benefit from using the platform himself.

In a different setting, Joe is a user of an e-commerce platform deciding whether to buy a book. If his friend has already purchased the book, Joe might be less likely to buy it, as he can borrow it from his friend. These examples illustrate how actions in network games can be affected by the actions of neighbouring players, leading to strategic interdependence and the emergence of equilibrium actions.

Inferring the Network from the Actions

In the above examples, we assumed that we know who Joe's friends are, i.e., the network structure of the game. However, in many situations, the underlying network structure is not directly available to us. Instead, we may only observe the equilibrium actions that result from the interactions between agents. In these cases, a crucial question is whether we can reconstruct the network structure based solely on these equilibrium actions. Knowing the network structure can be helpful in predicting behaviour and planning network-based interventions, such as marketing campaigns or information diffusion.

It was previously shown that, under specific assumptions about the mathematical form of the utility function and game types, it is possible to reconstruct the graph governing the network game.

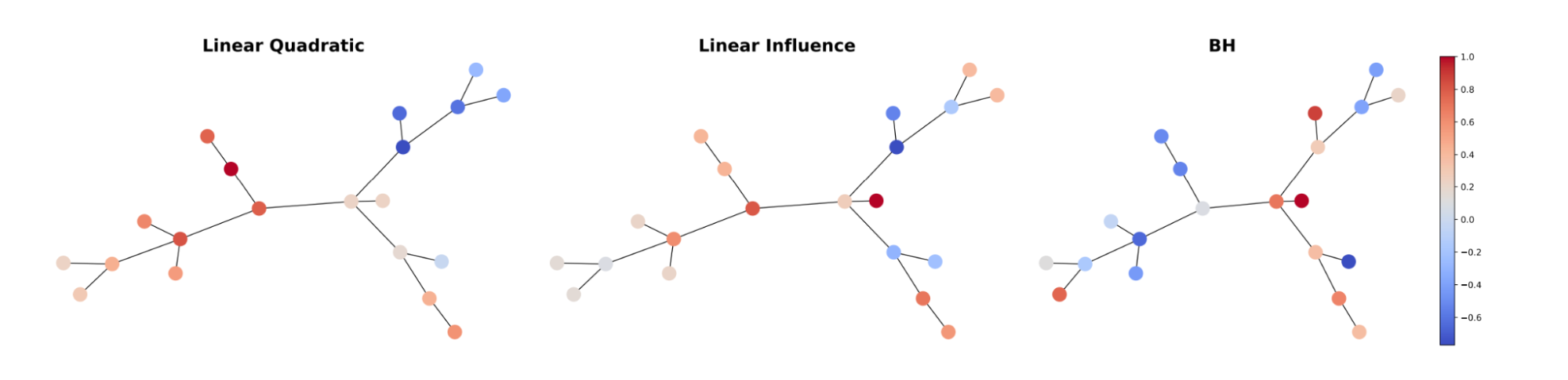

We start by studying three common types of network games: Linear Quadratic, Linear Influence, and Barik-Honorio.

For example, Linear Quadratic games have the following utility function:

\[u_i = b_i x_i -\frac{1}{2} x_i^2 + \beta \sum_{j \in \mathcal{N}_i} a_{ij} x_i x_j\]

where \(x_i\) is the continuous action taken by player \(i\), \(b_i\) is the player’s marginal benefit, \(\beta\) is a game parameter representing the strength of dependencies between actions of neighbours in the network, and \(a_{ij}\) is the entry in the adjacency matrix of the graph representing the strength of the connection between \(i\) and \(j\). The first term represents the marginal benefit of taking a larger action, the second (quadratic) term represents the cost of taking the action, and the third term captures the interaction with the neighbours’ actions.
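As a short worked step, and assuming the graph has no self-loops (\(a_{ii} = 0\)), each player's best response follows directly from this utility. Since \(u_i\) is concave in \(x_i\) (its second derivative is \(-1\)), setting the derivative to zero gives the maximiser:

\[\frac{\partial u_i}{\partial x_i} = b_i - x_i + \beta \sum_{j \in \mathcal{N}_i} a_{ij} x_j = 0 \quad\Longrightarrow\quad x_i = b_i + \beta \sum_{j \in \mathcal{N}_i} a_{ij} x_j\]

Stacking these \(n\) conditions gives the linear system \(\mathbf{x} = \mathbf{b} + \beta \mathbf{A}\mathbf{x}\), which has a unique solution whenever \(\mathbf{I} - \beta \mathbf{A}\) is invertible.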

The pure-strategy Nash equilibrium of Linear Quadratic games is:

\[\mathbf{x}^* = \big( \mathbf{I} - \beta \mathbf{A} \big)^{-1} \mathbf{b}\]

where \(\mathbf{x}^*\) is a vector of dimension \(n\) (equal to the number of players, or nodes of the graph), \(\mathbf{A}\) is the unknown \(n \times n\) adjacency matrix of the graph, and \(\mathbf{b}\) is the \(n\)-dimensional vector of marginal benefits of the players.
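The equilibrium formula can be checked numerically. The following is a minimal NumPy sketch, not code from the paper: the 4-player path graph, the values of \(\mathbf{b}\) and \(\beta\), and the function names `lq_equilibrium` and `lq_utility` are all illustrative assumptions.

```python
import numpy as np

def lq_equilibrium(A, b, beta):
    """Equilibrium actions x* = (I - beta*A)^{-1} b of a Linear Quadratic game."""
    n = A.shape[0]
    # Solve the linear system instead of forming the inverse explicitly.
    return np.linalg.solve(np.eye(n) - beta * A, b)

def lq_utility(x, A, b, beta):
    """Utility u_i of every player for the action profile x."""
    return b * x - 0.5 * x**2 + beta * x * (A @ x)

# Hypothetical 4-player path graph 0-1-2-3 with unit edge weights.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
b = np.array([1.0, 0.5, 0.5, 1.0])
beta = 0.3  # beta times the spectral radius of A is below 1, so I - beta*A is invertible

x_star = lq_equilibrium(A, b, beta)

# At equilibrium every action equals its best response b_i + beta * sum_j a_ij x_j.
best_response = b + beta * A @ x_star
```

Here `x_star` and `best_response` coincide up to floating-point error, and any unilateral deviation by a single player lowers that player's utility, which is what makes \(\mathbf{x}^*\) a Nash equilibrium.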

Similar formulas can be derived for Linear Influence and Barik-Honorio games. A formula for the equilibrium actions \(\mathbf{x}^*\) that generalizes all three games has the form

\[\mathbf{x}^* = \mathcal{F}(\mathbf{A})\, \mathcal{H}(\mathbf{b})\]

where the function \(\mathcal{F} (\mathbf{A})\) accounts for the influence from the actions of one’s neighbours in the network and encodes the specific utility function of the game, and conversely, \(\mathcal{H} (\mathbf{b})\) is only affected by one’s characteristics, such as the marginal benefit of an individual player. For Linear Quadratic games, for example, \(\mathcal{F}(\mathbf{A}) = (\mathbf{I} - \beta \mathbf{A})^{-1}\) and \(\mathcal{H}(\mathbf{b}) = \mathbf{b}\).

In the paper we further show

Machine Learning Approach

To tackle the problem of inferring the network structure of a game, we approach it as a machine learning problem. We train a model to map the players’ actions to the network structure of the game, without any prior knowledge of the underlying utility function. To achieve this, we gather a dataset of actions and network pairs (\(\mathbf{x}\), \(\mathbf{A}\)) from games played with the same utility function (although this function is unknown to us). This allows us to avoid making strong assumptions about the utility function and instead train a model that is agnostic to it.
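To make the training data concrete, here is a hedged sketch of how a synthetic dataset of (actions, network) pairs could be simulated. It assumes Linear Quadratic games on Erdős–Rényi random graphs with Gaussian marginal benefits. The graph model, the parameter values, and the function name `sample_dataset` are illustrative assumptions, and the learner itself would only ever see the resulting pairs, not the utility used to generate them.

```python
import numpy as np

def sample_dataset(num_graphs, n, K, beta, p=0.3, seed=0):
    """Return a list of (X, A) pairs with X of shape (n, K) and A of shape (n, n)."""
    rng = np.random.default_rng(seed)
    pairs = []
    for _ in range(num_graphs):
        # Symmetric Erdos-Renyi graph without self-loops (an assumed graph model).
        upper = np.triu(rng.random((n, n)) < p, k=1)
        A = (upper | upper.T).astype(float)
        # The equilibrium formula needs beta * spectral_radius(A) < 1.
        rho = np.abs(np.linalg.eigvalsh(A)).max()
        if beta * rho >= 1.0:
            raise ValueError("beta is too large for this graph")
        # One column of marginal benefits per game, all played on the same graph.
        B = rng.normal(size=(n, K))
        # Column k of X holds the equilibrium actions of game k.
        X = np.linalg.solve(np.eye(n) - beta * A, B)
        pairs.append((X, A))
    return pairs

# beta * (n - 1) = 0.9 < 1, so the spectral-radius condition always holds here.
dataset = sample_dataset(num_graphs=8, n=10, K=5, beta=0.1)
```

Keeping \(\beta\) fixed across graphs matches the setting described above, in which all games in the dataset share the same (unknown) utility function.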

Such an approach is particularly useful in scenarios where social network and decision data exist for a small population, and we aim to learn the mapping from decisions to the network structure of a larger population. For instance, governments, public agencies, and researchers can collect social network data on a small population by asking individuals to nominate their friends, and then use the proposed method to learn the network interactions for a larger population in a cost-effective manner.

Our ML model has an encoder-decoder architecture that is invariant to the permutation of the games and equivariant to the permutation of the players (reordering the players reorders the predicted adjacency matrix accordingly). Games and players correspond to the columns and rows of the \(n \times K\) matrix \(\mathbf{x}\), where \(K\) denotes the number of games. To achieve this, we modify a Transformer model, which naturally handles permutations of the set of nodes but not of the set of games.

Our encoder produces \(K\) vectors for each player as follows:

The scalar action \(\mathbf{x}_{ik}\) of player \(i\) for game \(k\) is first passed through a non-linear transformation resulting in an \(F\)-dimensional vector

\[\mathbf{y}_{ik} = \text{ReLU}(\mathbf{x}_{ik}\mathbf{W} + \mathbf{b})\]

where \(\mathbf{W}\) and \(\mathbf{b}\) are learned parameters (this \(\mathbf{b}\) is a bias, not the vector of marginal benefits). We then calculate the unnormalised attention scores

\[s_{ij} = \sum_{k} \mathbf{y}_{ik}^T \mathbf{W}_Q^T \mathbf{W}_K \mathbf{y}_{jk}\]

between players \(i\) and \(j\) by first computing per-game scores using a ‘learned dot-product’ with query and key weight matrices \(\mathbf{W}_Q\) and \(\mathbf{W}_K\), as in the original Transformer, and then summing them over the games.

The attention scores

\[\alpha_{ij} = \text{softmax}_{j}(s_{ij})\]

are obtained by taking the softmax of the unnormalised scores over the second dimension (the index \(j\)).

Finally, the \(F\)-dimensional embedding

\[\mathbf{z}_{ik} = \phi\left(\sum_{j} \alpha_{ij}\mathbf{y}_{jk}\right)\]

of node \(i\) for game \(k\) is obtained by aggregating the \(\mathbf{y}_{jk}\) vectors of the other nodes weighted by the attention scores, before passing the result through a 2-layer MLP \(\phi\).
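The encoder steps above can be sketched in a few lines of NumPy. This is an illustrative forward pass with randomly initialised, untrained weights, not the authors' implementation: the parameter names, the hidden width `H`, the initialisation scheme, and the ReLU inside \(\phi\) are all assumptions.

```python
import numpy as np

def softmax(s, axis):
    s = s - s.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(s)
    return e / e.sum(axis=axis, keepdims=True)

def init_params(F, H, rng):
    """Randomly initialised (untrained) encoder weights; shapes are assumptions."""
    return {
        "w_in": rng.normal(size=F), "b_in": np.zeros(F),                # scalar action -> R^F
        "W_q": rng.normal(size=(F, F)) / np.sqrt(F),                    # query weights
        "W_k": rng.normal(size=(F, F)) / np.sqrt(F),                    # key weights
        "M1": rng.normal(size=(F, H)) / np.sqrt(F), "c1": np.zeros(H),  # MLP phi, layer 1
        "M2": rng.normal(size=(H, F)) / np.sqrt(H), "c2": np.zeros(F),  # MLP phi, layer 2
    }

def encode(X, p):
    """Map actions X of shape (n, K) to embeddings Z of shape (n, K, F)."""
    # y_ik = ReLU(x_ik * w + b): lift every scalar action to an F-dimensional vector.
    Y = np.maximum(X[:, :, None] * p["w_in"] + p["b_in"], 0.0)  # (n, K, F)
    # s_ij = sum_k (W_q y_ik)^T (W_k y_jk): per-game scores, summed over games.
    Q = Y @ p["W_q"].T                                          # (n, K, F)
    Kmat = Y @ p["W_k"].T                                       # (n, K, F)
    S = np.einsum("ikf,jkf->ij", Q, Kmat)                       # (n, n)
    # alpha_ij: softmax over j, so every row sums to one.
    alpha = softmax(S, axis=1)
    # z_ik = phi(sum_j alpha_ij y_jk), with phi a 2-layer MLP shared across i and k.
    agg = np.einsum("ij,jkf->ikf", alpha, Y)                    # (n, K, F)
    hidden = np.maximum(agg @ p["M1"] + p["c1"], 0.0)
    return hidden @ p["M2"] + p["c2"]

rng = np.random.default_rng(0)
params = init_params(F=8, H=16, rng=rng)
X = rng.normal(size=(6, 4))  # 6 players, 4 games
Z = encode(X, params)        # shape (6, 4, 8)
```

Because the attention scores are summed over games while the embeddings are computed per game, reordering the columns of `X` simply reorders the second axis of `Z`, and reordering the rows of `X` reorders the first axis.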

The decoder outputs probabilities for each entry of the adjacency matrix by aggregating the \(K\) vectors for players \(i\) and \(j\). This is done by taking the dot product of the two vectors for each game and summing the results before feeding them into a multilayer perceptron (MLP):

\[\hat{a}_{ij} = \psi\left(\sum_{k} \mathbf{z}_{ik} \odot \mathbf{z}_{jk}\right)\]

where \(\odot\) represents the dot product and \(\psi\) is a 2-layer MLP.

The resulting model is permutation-invariant over the set of games. This means that it produces the same predicted adjacency matrix regardless of the order in which the games are presented. To achieve this, the model computes separate node embeddings for each game and aggregates them through summation, which is a permutation-invariant operation. In practice, this property of the model ensures that we obtain consistent and reliable predictions, regardless of how the input data is ordered.
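The decoder step and the invariance over games can be illustrated with a small NumPy sketch. It follows the description in the text (a per-game dot product, summed over games, then a 2-layer MLP). The weights are random and untrained, the embeddings `Z` are a random stand-in for the encoder output, and the final sigmoid is an assumed choice for turning the MLP output into a probability.

```python
import numpy as np

def sigmoid(t):
    # Numerically stable form of 1 / (1 + exp(-t)).
    return 0.5 * (1.0 + np.tanh(0.5 * t))

def decode(Z, p):
    """Map embeddings Z of shape (n, K, F) to edge probabilities of shape (n, n)."""
    # Dot product of z_ik and z_jk for every game k, then summed over the games.
    S = np.einsum("ikf,jkf->ij", Z, Z)                           # (n, n)
    # psi: a 2-layer MLP applied to every (i, j) entry independently.
    hidden = np.maximum(S[:, :, None] * p["v1"] + p["d1"], 0.0)  # (n, n, H)
    logits = hidden @ p["v2"] + p["d2"]                          # (n, n)
    # Assumed output non-linearity turning logits into probabilities.
    return sigmoid(logits)

rng = np.random.default_rng(0)
n, K, F, H = 6, 4, 8, 16
params = {"v1": rng.normal(size=H), "d1": np.zeros(H),
          "v2": rng.normal(size=H) / np.sqrt(H), "d2": 0.0}
Z = rng.normal(size=(n, K, F))  # stand-in for the encoder output
A_hat = decode(Z, params)

# Reordering the games leaves the prediction unchanged.
perm = rng.permutation(K)
A_hat_perm = decode(Z[:, perm], params)
```

In this sketch `A_hat` and `A_hat_perm` agree up to floating-point error, because the only place where the game index enters the decoder is a sum. The prediction is also symmetric in \(i\) and \(j\), since the dot product is.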

Experimental Results

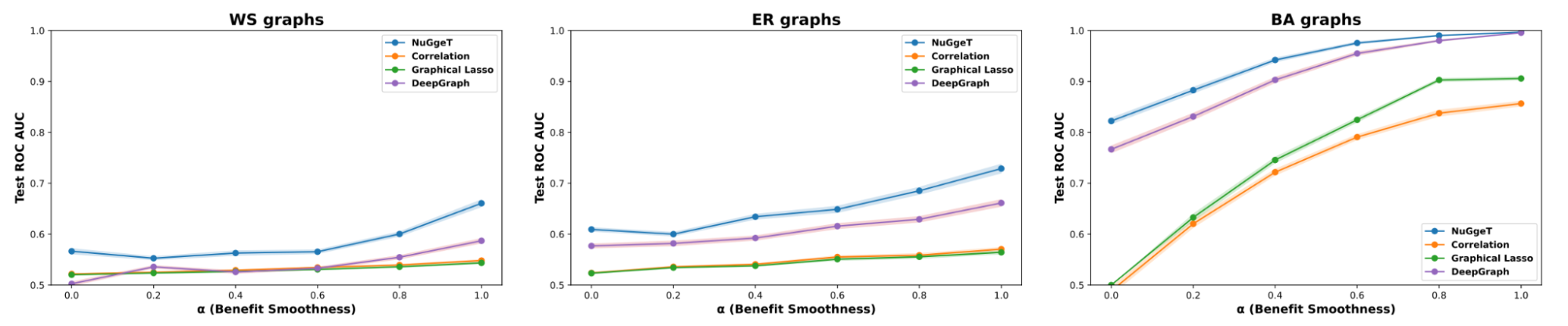

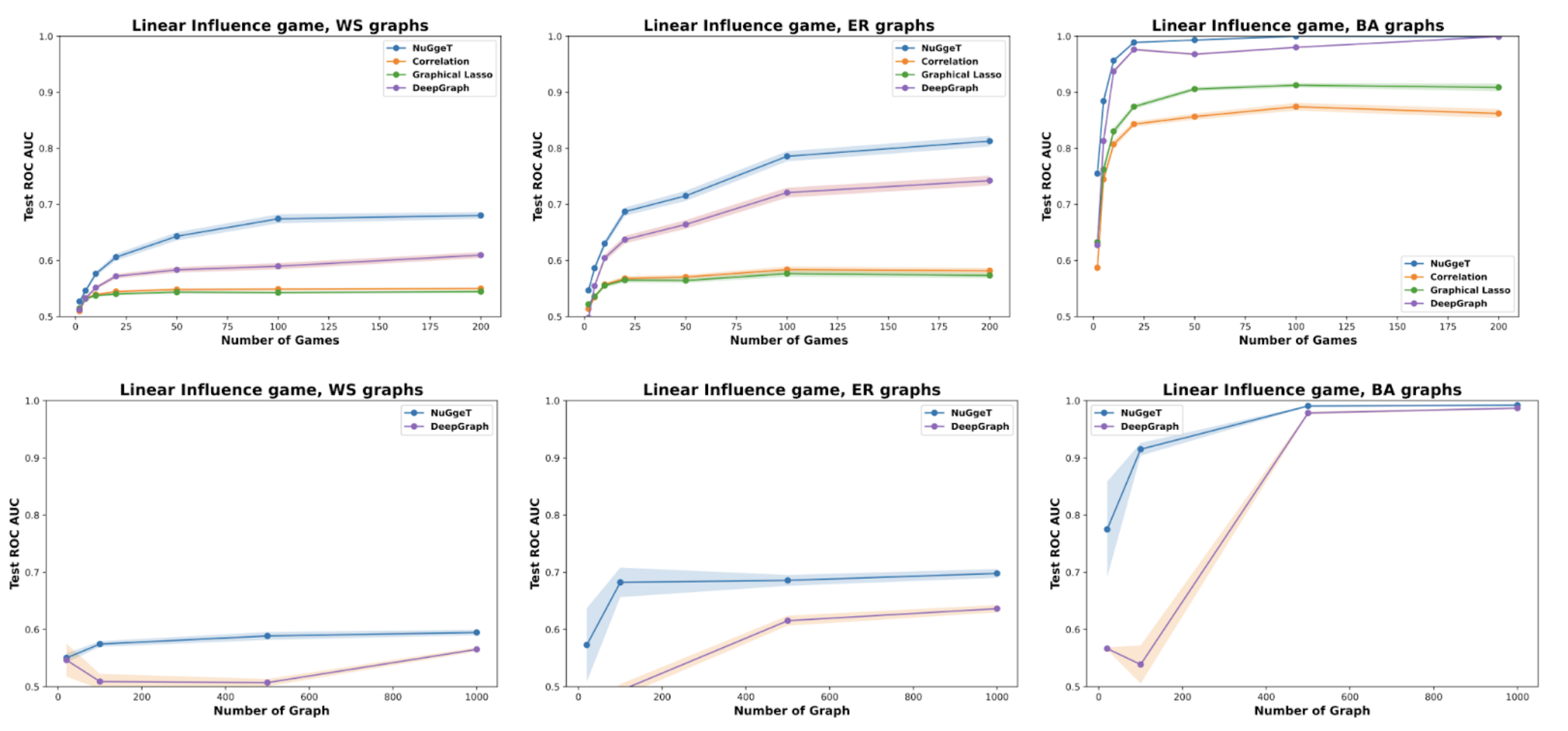

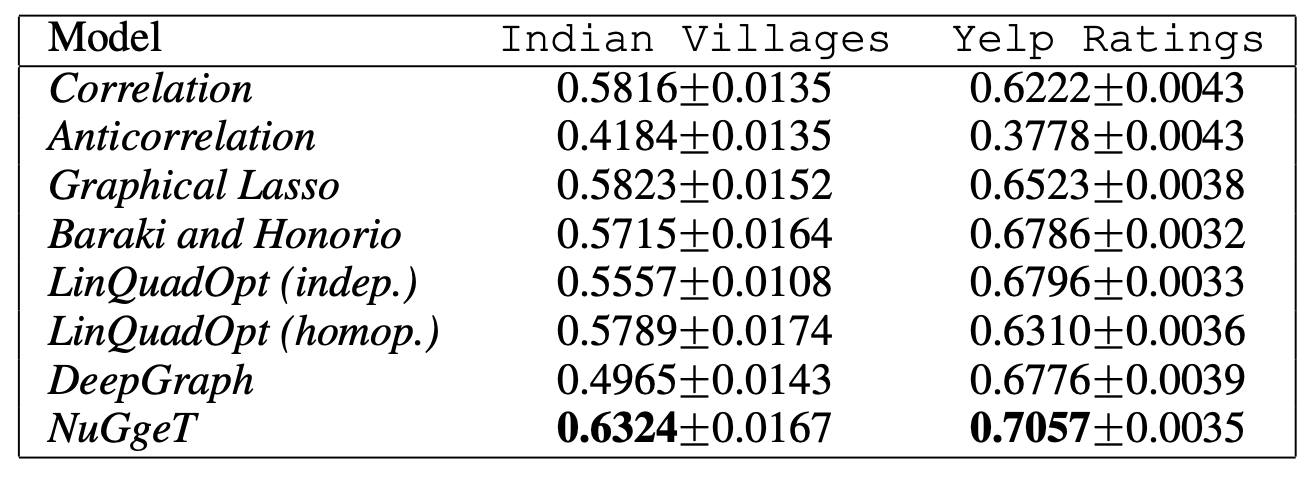

We conducted experiments to validate the effectiveness of our approach in learning the network structure from players’ actions, using both synthetic and real-world datasets. As baselines, we used DeepGraph

On synthetic datasets, our model, NuGgeT, consistently outperformed previous methods across a range of different games and graph types.

We further validated our approach on two real-world datasets: the Indian Villages dataset and the Yelp Ratings dataset.

The Yelp Ratings dataset consists of user ratings of businesses and social connectivity between users. We extracted 5000 sub-graphs representing communities from the raw data, where the actions were the average rating of users for 22 categories of businesses.

On both real-world datasets, NuGgeT outperforms previous methods, showcasing the efficacy of our approach in cases where the game utility is not explicitly known. The gain is particularly large on the Indian Villages dataset, where the competing DeepGraph method fails to learn altogether. We conjecture this is due to NuGgeT being more data-efficient thanks to its built-in invariances, as confirmed by the above ablation over the number of training graphs.

In conclusion, our paper highlights the fruitful connection between game theory and graph machine learning, particularly in the context of network games. By developing a new machine learning approach to infer network structure from observed game outcomes, we show the potential for utilising game theory ideas to enhance machine learning and vice versa. Looking forward, there is ample opportunity to explore further connections between network games and graph neural networks, paving the way for more exciting developments in these fields.
